Research Interests

- My research focuses on deep generative modeling and trustworthy AI, specifically controllability, robustness, and multimodality in foundation models. My work also emphasizes curating more informative and comprehensive data.

- Please feel free to reach out by email. I am open to discussing emerging research directions, potential collaborations, and opportunities.

Recent Works

- The full publication list can be found here.

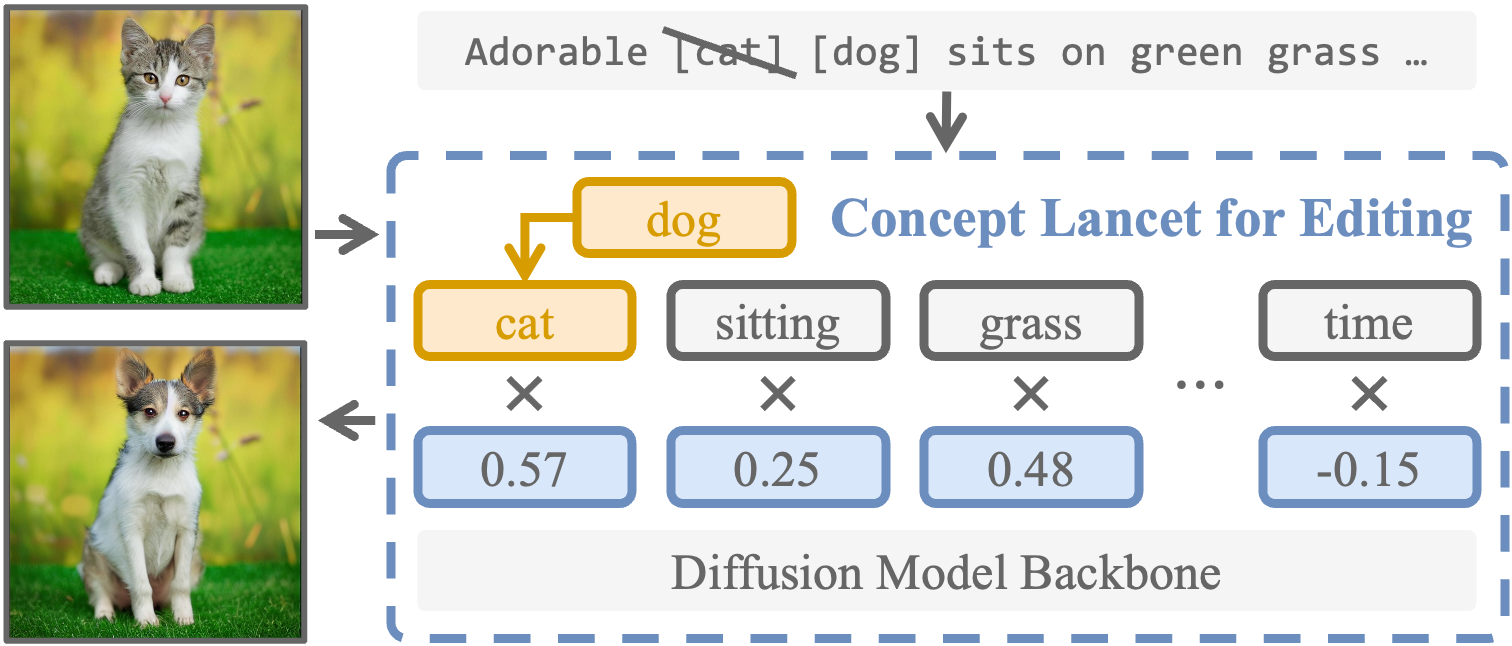

Concept Lancet: Image Editing with Compositional Representation Transplant

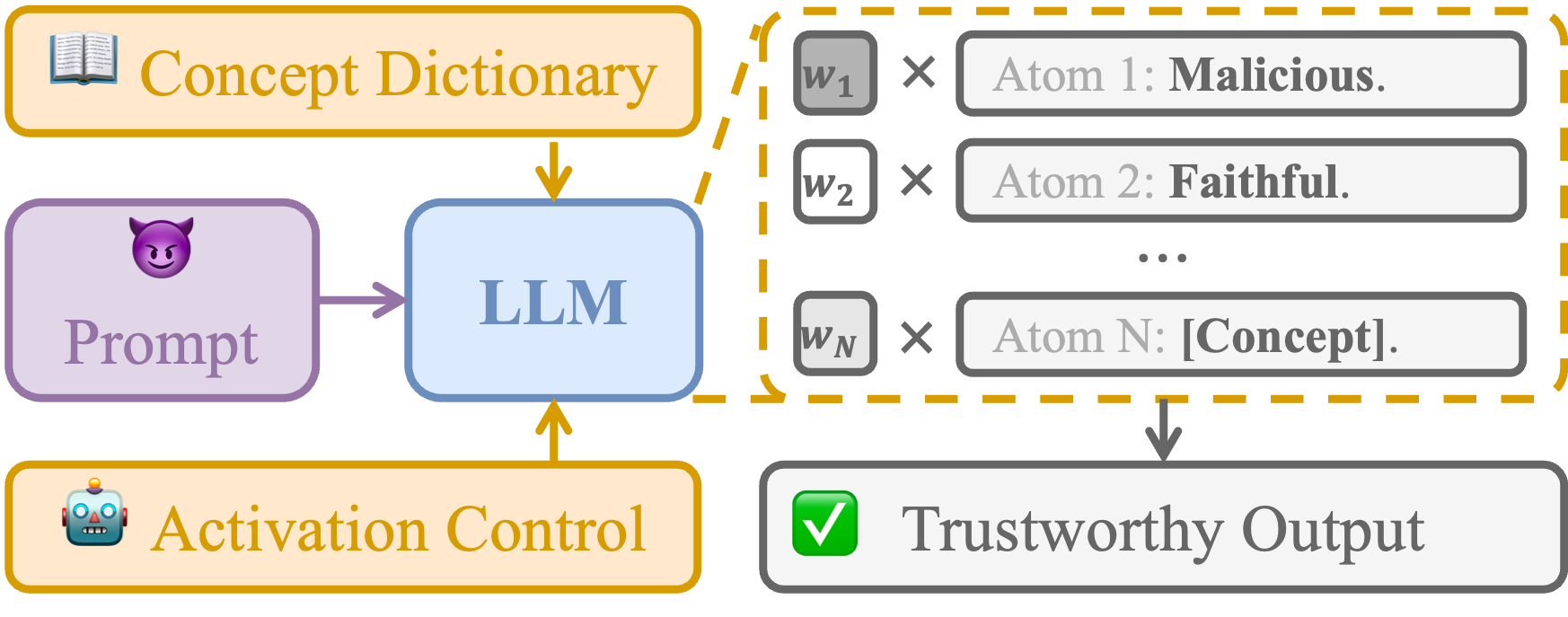

PaCE: Parsimonious Concept Engineering for Large Language Models

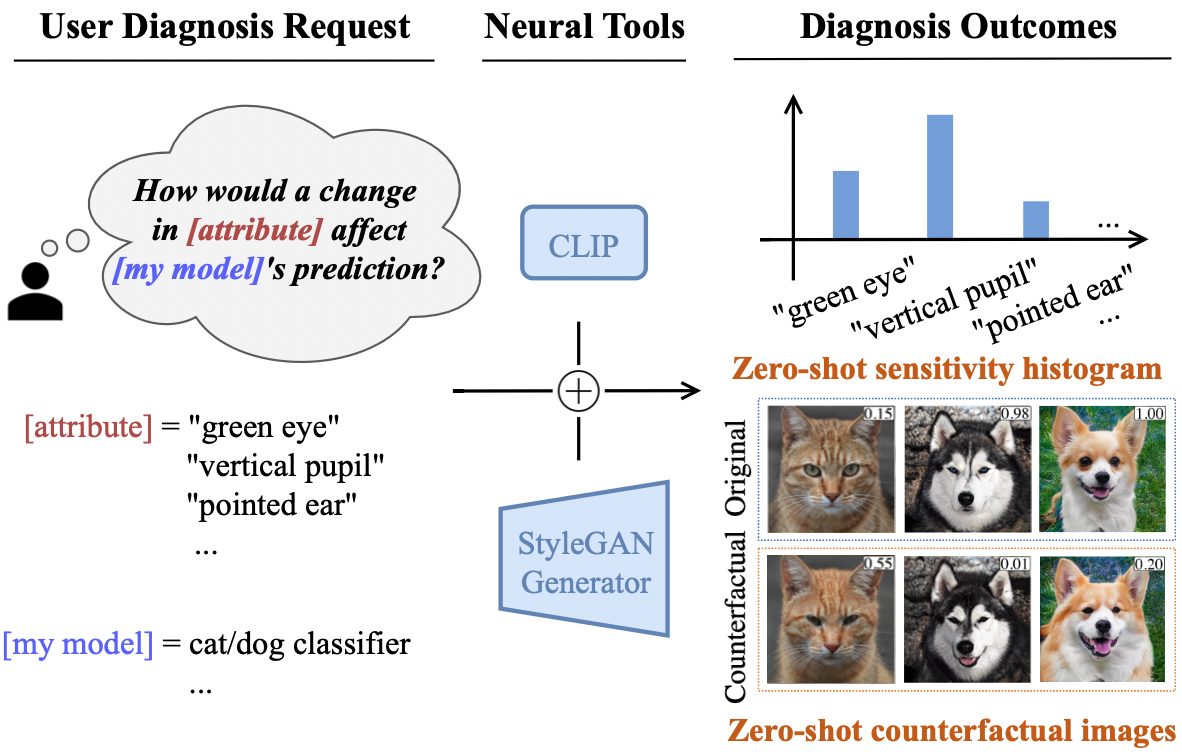

Zero-shot Model Diagnosis

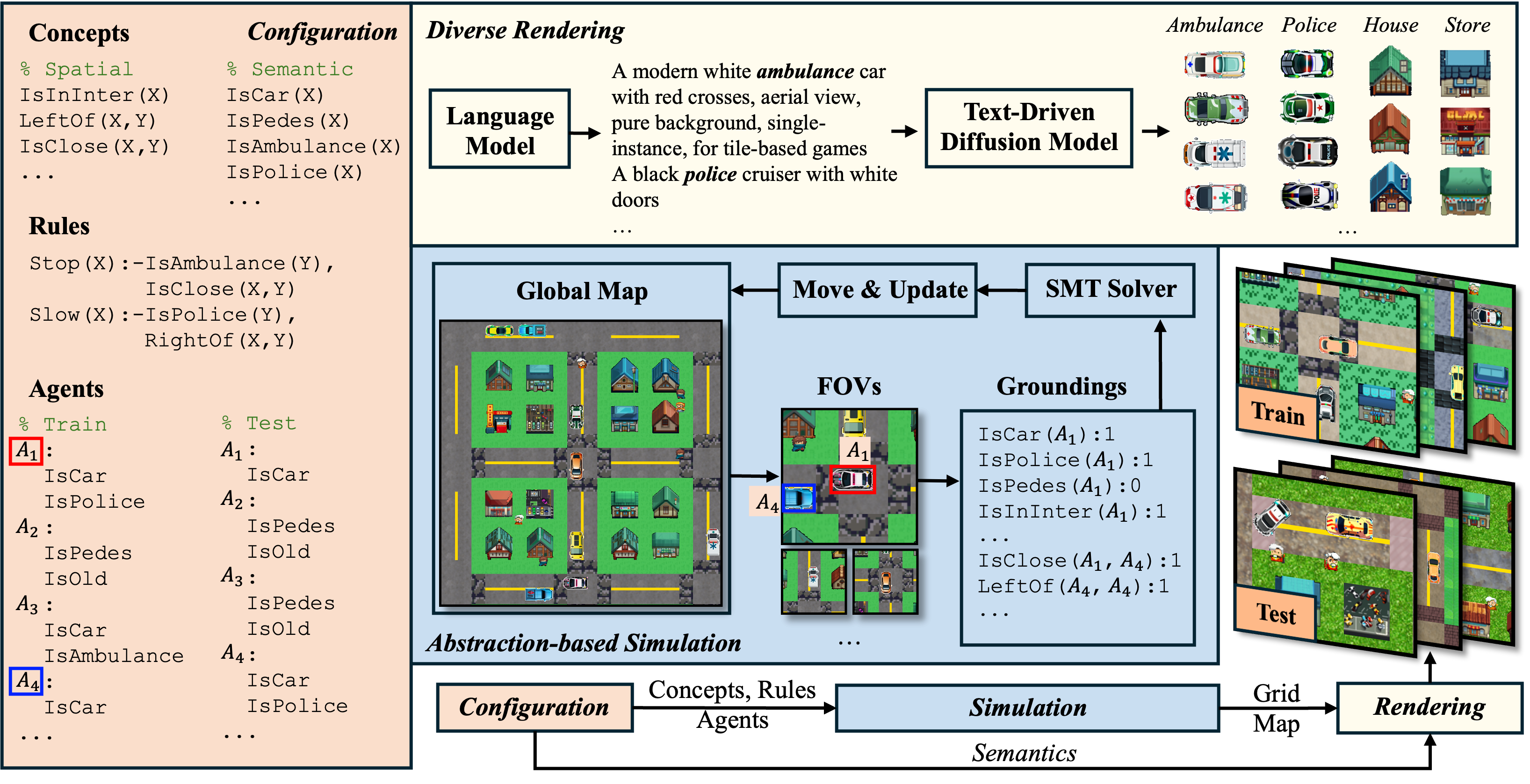

LogiCity: Advancing Neural-Symbolic AI with Abstract Urban Simulation

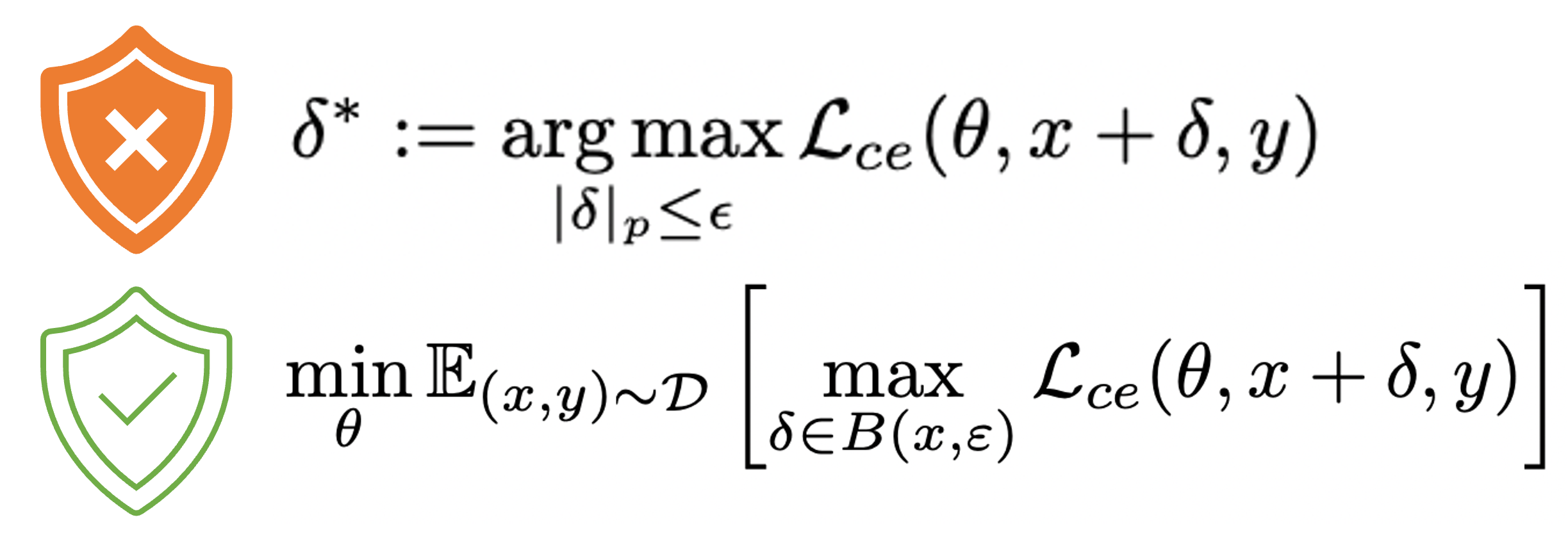

Recent Advances in Adversarial Training for Adversarial Robustness

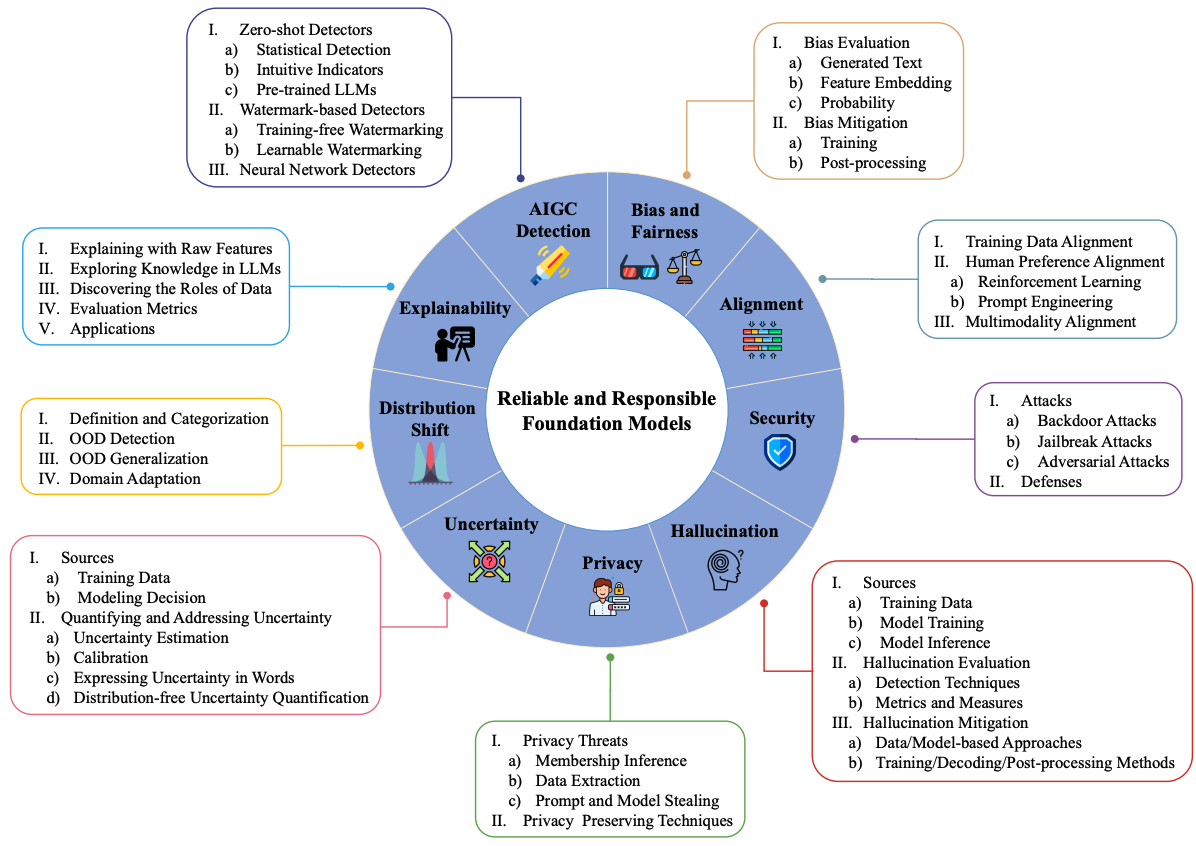

Reliable and Responsible Foundation Models

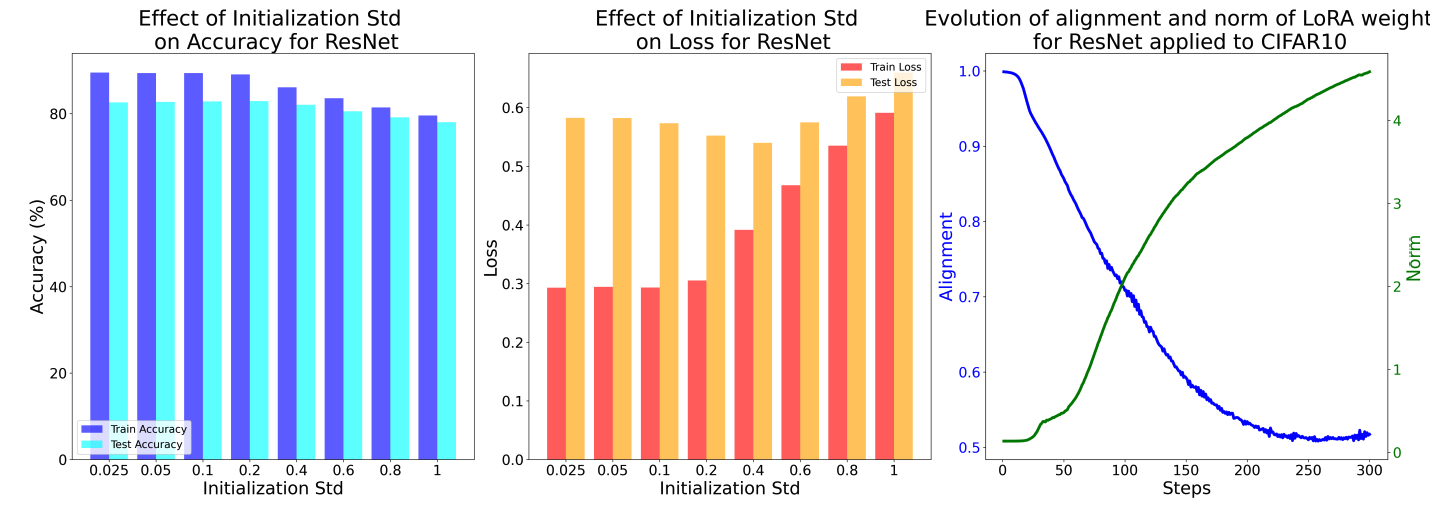

A Gradient Flow Perspective on Low-Rank Adaptation in Matrix Factorization

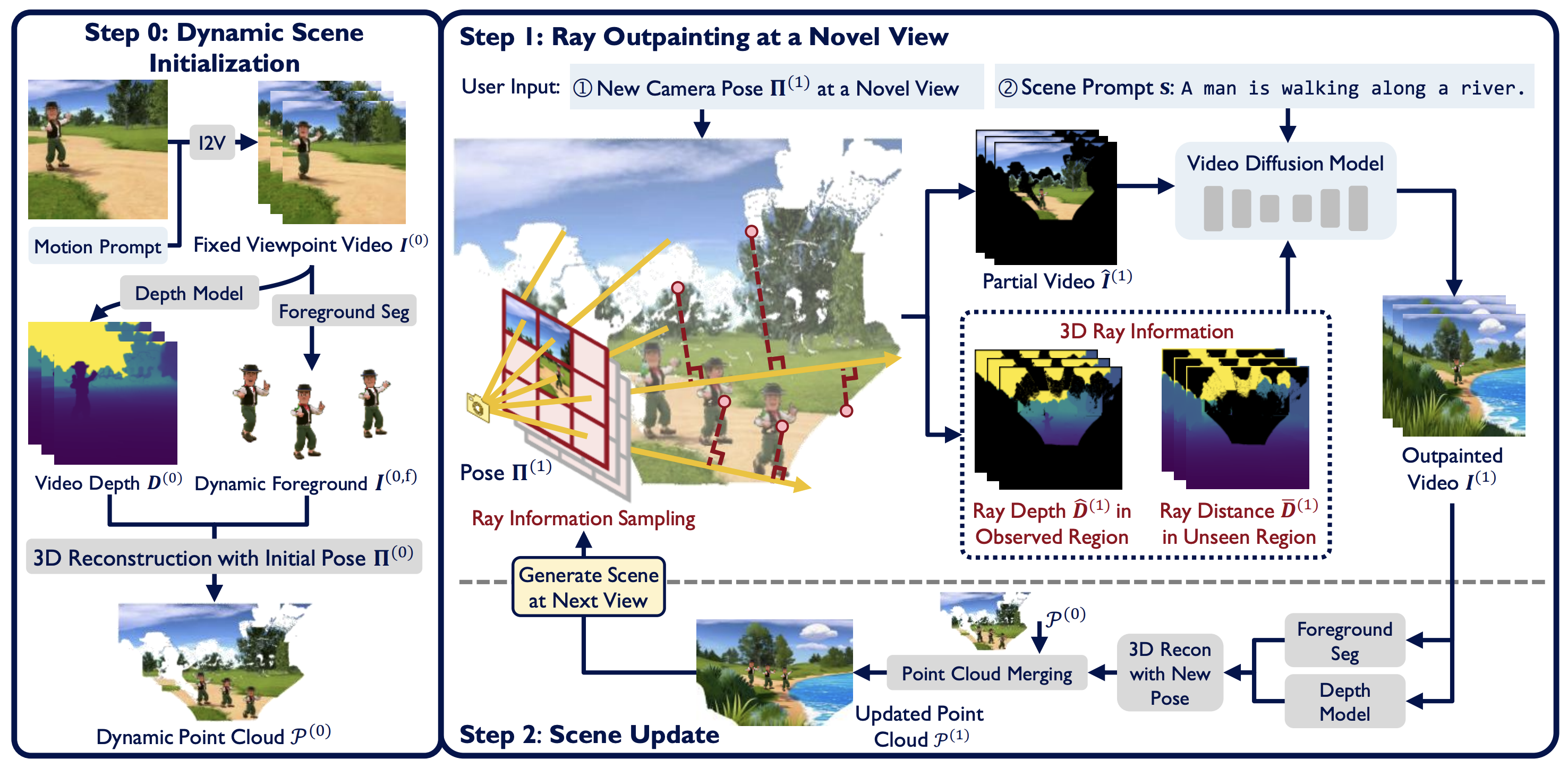

Voyaging into Perpetual Dynamic Scenes from a Single View

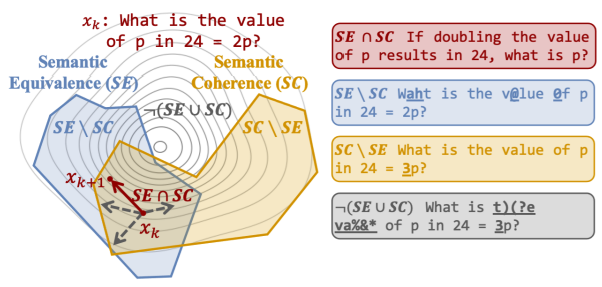

SECA: Semantically Equivalent and Coherent Attacks for Eliciting LLM Hallucinations

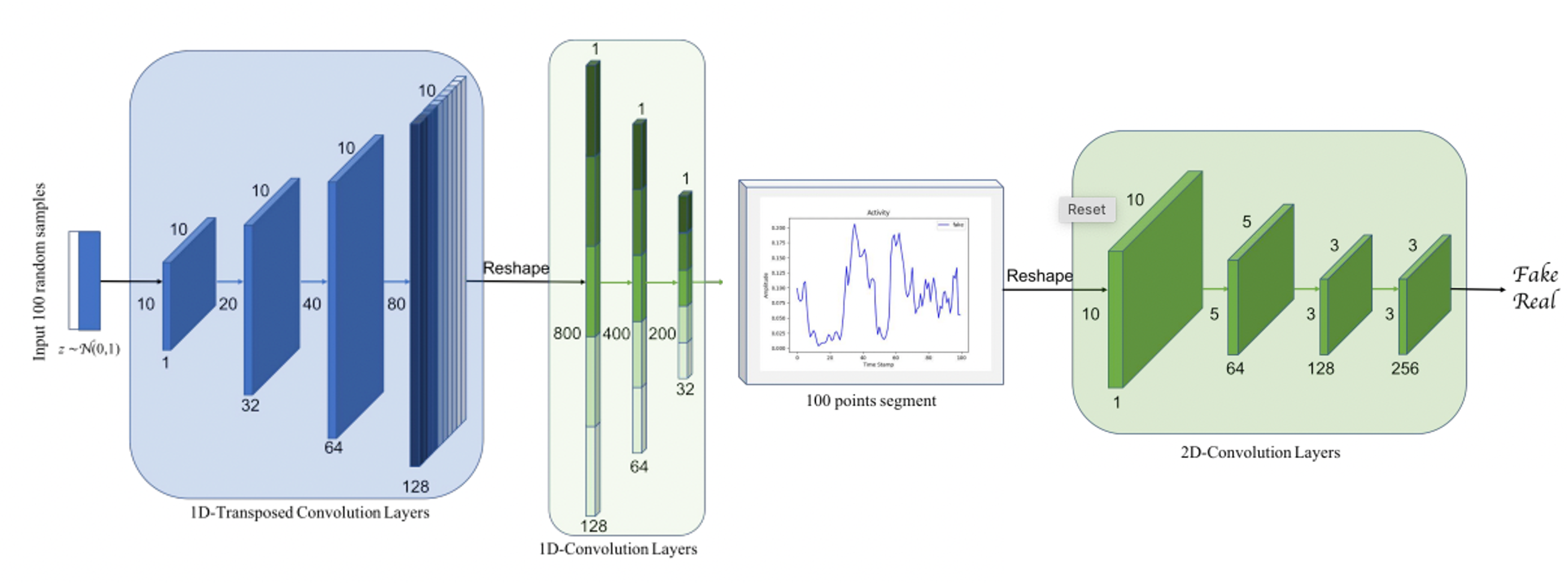

ActivityGAN: Data Augmentation in Sensor-Based Human Activity Recognition

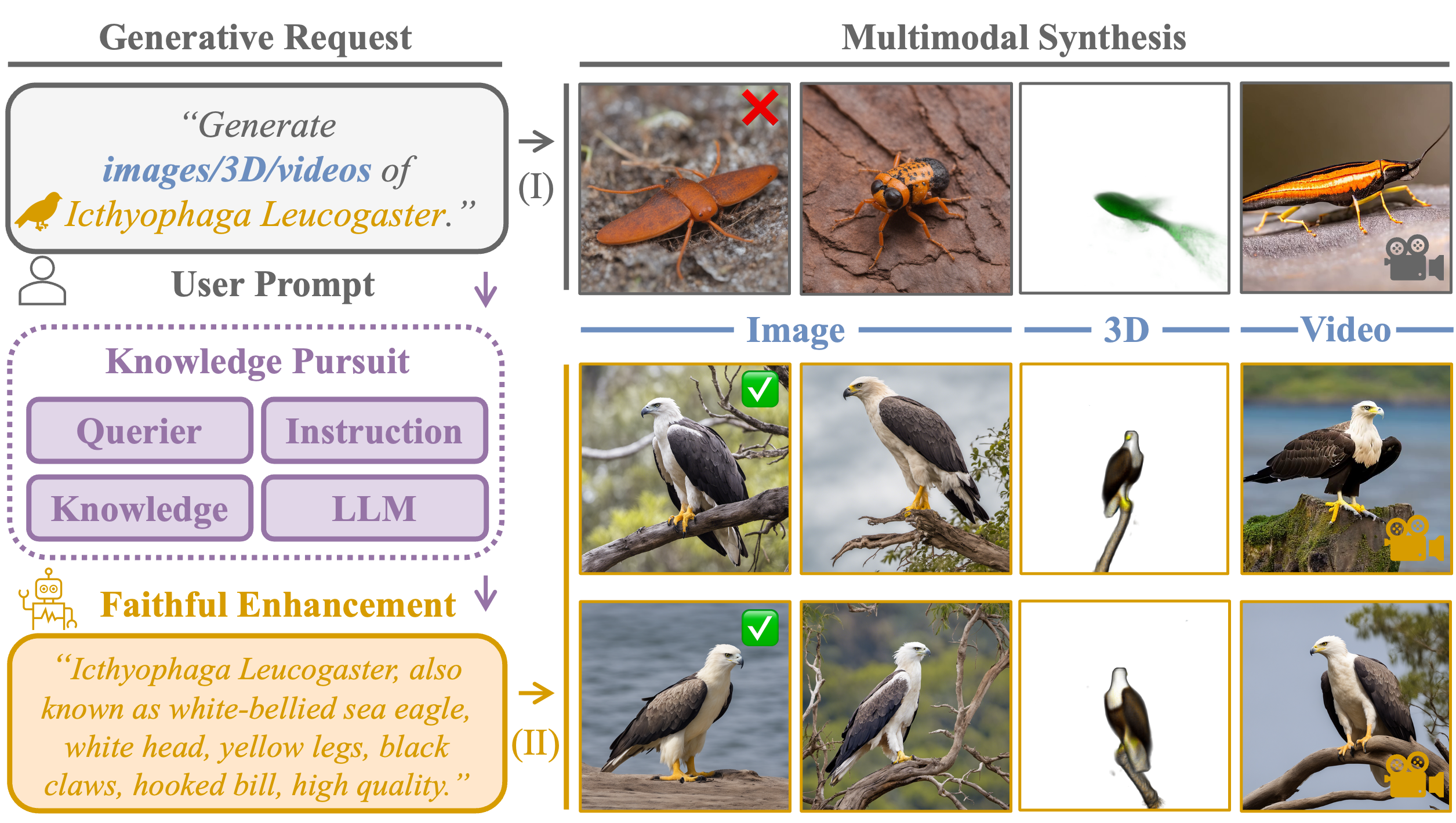

Contextual Knowledge Pursuit for Faithful Visual Synthesis

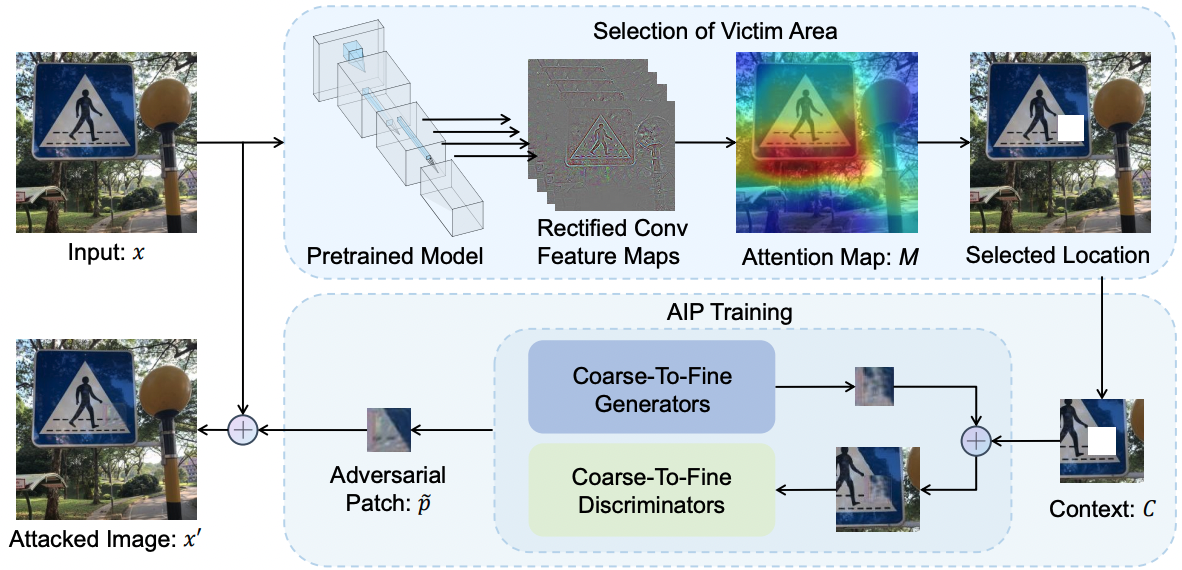

Generating Adversarial yet Inconspicuous Patches with a Single Image

Services and Activities

- Conference Reviewer: CVPR, NeurIPS, ICLR, ICML, ICCV, EMNLP, ACL ARR, AISTATS, AAAI, ECCV.

- Journal Reviewer: TMLR, Neurocomputing, TNNLS.

- Organizer: ICLR FM-Wild Workshop.

- Teaching Assistant: [Penn Fall 2023] Deep Generative Models (founding member); [Penn Fall 2024] Deep Generative Models.